In this notebook, we’re going to take the [dataset we prepared] and continue to iterate on the modeling. Last time we built a model using TensorFlow and Xception. This time, we’re going to iterate on that using FastAI.

Note: This notebook has been rewritten because FastAI has changed significantly since it was first written. The version it was most recently run with is shown below.

import fastai
from fastai.data.all import *
from fastai.vision.all import *

fastai.__version__

'2.5.3'

First, we need to create a DataBlock. Please see my FastAI Data Tutorial - Image Classification for details on this process.

dblock = DataBlock(blocks=(ImageBlock, CategoryBlock),
                   get_items=get_image_files,
                   get_y=parent_label,
                   splitter=GrandparentSplitter('train', 'val'),
                   item_tfms=Resize(224))
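`parent_label` and `GrandparentSplitter` both rely on the directory structure. The plain-Python sketch below mirrors what they do, to make the assumed layout explicit: `<root>/train/<class>/<image>` and `<root>/val/<class>/<image>`. It does not call fastai, and the class folder names and file names are hypothetical.

```python
from pathlib import PurePosixPath

# Hypothetical files laid out as <root>/<split>/<class>/<image>.
# The class names "kangaroo" and "wallaby" are assumptions for illustration.
files = [
    PurePosixPath("WallabiesAndRoosFullSize/train/kangaroo/img_001.jpg"),
    PurePosixPath("WallabiesAndRoosFullSize/train/wallaby/img_002.jpg"),
    PurePosixPath("WallabiesAndRoosFullSize/val/kangaroo/img_003.jpg"),
    PurePosixPath("WallabiesAndRoosFullSize/val/wallaby/img_004.jpg"),
]

def parent_label_sketch(p):
    """Label = name of the directory that directly contains the file."""
    return p.parent.name

def grandparent_split_sketch(items, train_name="train", valid_name="val"):
    """Return (train_idxs, valid_idxs) based on each file's grandparent directory."""
    train = [i for i, p in enumerate(items) if p.parent.parent.name == train_name]
    valid = [i for i, p in enumerate(items) if p.parent.parent.name == valid_name]
    return train, valid

train_idxs, valid_idxs = grandparent_split_sketch(files)
print(train_idxs, valid_idxs)                   # [0, 1] [2, 3]
print([parent_label_sketch(p) for p in files])  # ['kangaroo', 'wallaby', 'kangaroo', 'wallaby']
```

Files whose grandparent is neither `train` nor `val` end up in neither list, which is worth keeping in mind if the folder names don't match the splitter arguments exactly.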

We need to point to the image root path.

import sys

# The data lives in a different place depending on the machine
if sys.platform == 'linux':
    path = Path(r'/home/julius/data/WallabiesAndRoosFullSize')
else:
    path = Path(r'E:/Data/Raw/WallabiesAndRoosFullSize')

dls = dblock.dataloaders(path)

Let’s see how many items we have in each set.

len(dls.train.items), len(dls.valid.items)

(3653, 567)
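As a quick sanity check on the split, the two counts above can be turned into a validation fraction:

```python
# Image counts reported above for the train and validation sets.
n_train, n_valid = 3653, 567

total = n_train + n_valid
valid_frac = n_valid / total

print(total)                # 4220
print(f"{valid_frac:.1%}")  # 13.4%
```

So roughly one image in seven is held out for validation.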

Let’s look at some example images to make sure everything is correct.

dls.train.show_batch(max_n=4, nrows=1)

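FastAI provides a standard set of data augmentations through aug_transforms that work well out of the box, so we’ll apply them as batch transforms. Passing mult=2 doubles the maximum rotation, lighting change, and warp. We’ll also change the item transform to resize to 128 pixels using ResizeMethod.Pad, which pads the image with zeros (black) instead of cropping it, so no part of the animal gets cut off.

dblock = dblock.new(item_tfms=Resize(128, ResizeMethod.Pad, pad_mode='zeros'),
                    batch_tfms=aug_transforms(mult=2))
dls = dblock.dataloaders(path)

dls.valid.show_batch(max_n=4, nrows=1)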
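With padding, the whole image is kept: conceptually, it is scaled so its longer side fits the 128-pixel target, and the leftover area along the shorter side is filled with zeros. The sketch below only illustrates that size arithmetic for a hypothetical 1024×768 photo. It is not fastai’s implementation, and its integer rounding and the way padding is split between the two sides are assumptions.

```python
def pad_resize_dims(width, height, target=128):
    """Scale so the longer side equals `target`, then pad the shorter side.

    Returns (scaled_w, scaled_h, pad_w, pad_h), where pad_* is the total
    zero padding added along that axis. Rounding here is an assumption and
    may differ slightly from fastai's own Resize.
    """
    scale = target / max(width, height)
    scaled_w = round(width * scale)
    scaled_h = round(height * scale)
    return scaled_w, scaled_h, target - scaled_w, target - scaled_h

print(pad_resize_dims(1024, 768))  # (128, 96, 0, 32): landscape, padded top/bottom
print(pad_resize_dims(768, 1024))  # (96, 128, 32, 0): portrait, padded left/right
```

Cropping would instead scale the shorter side to 128 and discard the overflow, which can remove part of the subject.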


learn = cnn_learner(dls, resnet18, metrics=error_rate)
learn.fine_tune(4)


| epoch | train_loss | valid_loss | error_rate | time |
|---|---|---|---|---|
| 0 | 0.720236 | 0.259093 | 0.104056 | 02:09 |

| epoch | train_loss | valid_loss | error_rate | time |
|---|---|---|---|---|
| 0 | 0.339751 | 0.301955 | 0.141093 | 02:07 |
| 1 | 0.225766 | 0.444917 | 0.105820 | 02:08 |
| 2 | 0.146328 | 0.176450 | 0.095238 | 02:08 |
| 3 | 0.109386 | 0.162337 | 0.091711 | 02:06 |
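There are two training tables because fine_tune runs two phases. First it freezes the pretrained body and trains only the new head for one epoch. Then it unfreezes the whole network and trains for the requested 4 epochs, giving the earliest layers a smaller learning rate than the head. The sketch below just records that schedule as plain data and does not train anything. The default values (base_lr=2e-3, freeze_epochs=1, lr_mult=100) are our understanding of fastai 2.x and should be checked against the version in use.

```python
def fine_tune_schedule(epochs, base_lr=2e-3, freeze_epochs=1, lr_mult=100):
    """Describe the two one-cycle phases of fastai's Learner.fine_tune.

    Defaults are assumed from fastai 2.x; nothing is trained here.
    """
    phases = [
        # Phase 1: pretrained body frozen, only the new head is trained.
        {"frozen": True, "epochs": freeze_epochs, "max_lr": base_lr},
    ]
    base_lr /= 2  # the base rate is halved before unfreezing
    phases.append(
        # Phase 2: whole network trained; (earliest layers, head) learning rates.
        {"frozen": False, "epochs": epochs, "max_lr": (base_lr / lr_mult, base_lr)}
    )
    return phases

for phase in fine_tune_schedule(4):
    print(phase)
```

That accounts for the 1 + 4 = 5 epoch rows split across the two tables.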

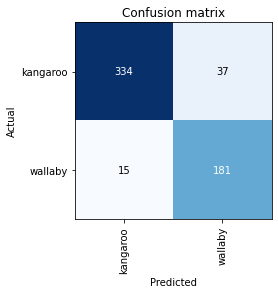

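The error_rate is measured on the validation set. Its final value of 0.0917 means about 9.2% of validation images were misclassified, which corresponds to roughly 91% accuracy. Let’s look at a confusion matrix to see where the errors are.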
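Since the validation set has 567 images, the error rate can be converted back into a count of mistakes and an accuracy:

```python
# Validation-set size and the final error_rate from the training table above.
n_valid = 567
final_error_rate = 0.091711

n_wrong = round(final_error_rate * n_valid)  # number of misclassified images
accuracy = 1 - n_wrong / n_valid

print(n_wrong)            # 52
print(f"{accuracy:.1%}")  # 90.8%
```

In other words, 515 of the 567 validation images were classified correctly.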

interp = ClassificationInterpretation.from_learner(learn)
interp.plot_confusion_matrix()
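In the plotted matrix, rows are the actual classes and columns are the predicted classes, so correct predictions sit on the diagonal. A minimal plain-Python sketch of how such a matrix is counted, using hypothetical class names and a handful of made-up predictions rather than this model’s output:

```python
from collections import Counter

def confusion_matrix(actual, predicted, classes):
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in classes] for a in classes]

classes = ["kangaroo", "wallaby"]  # hypothetical class names
actual    = ["kangaroo", "kangaroo", "wallaby", "wallaby", "wallaby"]
predicted = ["kangaroo", "wallaby",  "wallaby", "wallaby", "kangaroo"]

cm = confusion_matrix(actual, predicted, classes)
for name, row in zip(classes, cm):
    print(name, row)
# kangaroo [1, 1]
# wallaby [1, 2]
```

Summing the diagonal gives the number of correct predictions (3 of 5 here), and each off-diagonal cell shows how often one class was mistaken for the other.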