In this tutorial, I look at how to prepare a semantic segmentation dataset for use with FastAI, using the Brain MRI segmentation dataset (`lgg-mri-segmentation`) from Kaggle as an example. This post focuses on the components that are specific to semantic segmentation. For general tips and tricks on using FastAI with data, see my FastAI Data Tutorial - Image Classification.

from fastai.vision.all import *

path = Path('/home/julius/data/kaggle/lgg-mri-segmentation/kaggle_3m/')

def get_images(path):
    # Collect every image file, then drop the mask files so only the scans remain
    all_files = get_image_files(path)
    images = [i for i in all_files if 'mask' not in str(i)]
    return images

def get_label(im_path):
    return im_path.parent / (im_path.stem + '_mask' + im_path.suffix)
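To make the naming convention concrete, here is a small standalone sketch of the same path mapping using only `pathlib`. It assumes, as in this dataset, that each mask sits next to its image with `_mask` appended to the file stem. The `missing_labels` helper is hypothetical, just a sanity check, and not part of FastAI.

```python
from pathlib import Path

def get_label(im_path):
    # Same rule as above: <stem>_mask<suffix> in the same folder as the image
    return im_path.parent / (im_path.stem + '_mask' + im_path.suffix)

def missing_labels(image_paths):
    # Hypothetical helper: list the images whose mask file does not exist on disk
    return [p for p in image_paths if not get_label(p).exists()]

example = Path('TCGA_DU_7309_19960831/TCGA_DU_7309_19960831_21.tif')
label = get_label(example)
print(label.name)  # TCGA_DU_7309_19960831_21_mask.tif
```

Running `missing_labels(all_images)` before building the DataBlock should return an empty list if every image has a matching mask.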

all_images = get_images(path)

examp_im_path = all_images[0]

examp_im_path

Path('/home/julius/data/kaggle/lgg-mri-segmentation/kaggle_3m/TCGA_DU_7309_19960831/TCGA_DU_7309_19960831_21.tif')

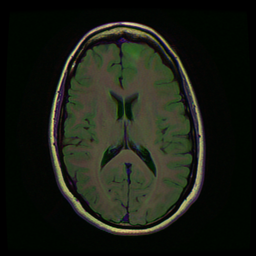

im = PILImage.create(examp_im_path)

im

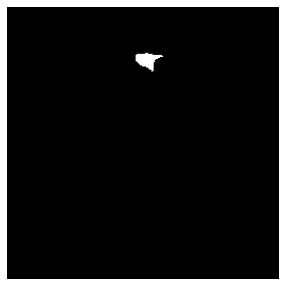

mask = PILImage.create(get_label(examp_im_path))

mask.show(figsize=(5,5), alpha=1)

<AxesSubplot:>

mask.shape

(256, 256)

codes = ['n', 'y']

bs=16

Now we determine which blocks to use. For segmentation tasks, the input is an ImageBlock and the target is generally a MaskBlock, which takes the list of class names (`codes`).

blocks = (ImageBlock, MaskBlock(codes))
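The entries of `codes` are matched to the integer values stored in the mask: pixel value 0 is the first code, 1 the second, and so on. Binary masks saved as images often use 255 rather than 1 for the foreground, so it is worth checking the values before training. The sketch below uses a small synthetic NumPy array as a stand-in for a real mask. The 0/255 encoding is an assumption to verify on your own files (for example with `np.unique(np.array(mask))`), and `to_class_indices` is a hypothetical helper.

```python
import numpy as np

codes = ['n', 'y']

# Synthetic stand-in for a mask, ASSUMING background=0 and foreground=255
raw_mask = np.array([[0,   0, 255],
                     [0, 255, 255]], dtype=np.uint8)

def to_class_indices(mask_arr):
    # Hypothetical helper: map every non-zero pixel to class index 1
    return (mask_arr > 0).astype(np.uint8)

print(np.unique(raw_mask).tolist())   # [0, 255]
idx_mask = to_class_indices(raw_mask)
print(np.unique(idx_mask).tolist())   # [0, 1]
```

After remapping, every value in the mask is a valid index into `codes`. With real data, one option is to apply a remapping like this inside the function passed as `get_y`.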

Now we create the DataBlock.

dblock = DataBlock(blocks = blocks,
                   get_items = get_images,
                   get_y = get_label,
                   splitter = RandomSplitter(),
                   item_tfms = Resize(224))
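`RandomSplitter()` is called here with its defaults, which in FastAI hold out 20% of the items (`valid_pct=0.2`) for validation. The following is a rough pure-Python sketch of that idea, not FastAI's own implementation. `random_split` is a hypothetical name, and 3929 is the item count that `dblock.summary` reports for this dataset.

```python
import random

def random_split(n_items, valid_pct=0.2, seed=None):
    # Hypothetical helper: shuffle the indices, then cut off the first
    # int(valid_pct * n_items) of them as the validation set
    rng = random.Random(seed)
    idxs = list(range(n_items))
    rng.shuffle(idxs)
    cut = int(valid_pct * n_items)
    return idxs[cut:], idxs[:cut]

train_idxs, valid_idxs = random_split(3929, seed=42)
print(len(train_idxs), len(valid_idxs))  # 3144 785
```

Passing a `seed` to `RandomSplitter` makes the split reproducible between runs.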

dblock.summary(path)

Setting-up type transforms pipelines

Collecting items from /home/julius/data/kaggle/lgg-mri-segmentation/kaggle_3m

Found 3929 items

2 datasets of sizes 3144,785

Setting up Pipeline: PILBase.create

Setting up Pipeline: get_label -> PILBase.create

Setting up Pipeline: PILBase.create

Setting up Pipeline: get_label -> PILBase.create

Building one sample

Pipeline: PILBase.create

starting from

/home/julius/data/kaggle/lgg-mri-segmentation/kaggle_3m/TCGA_DU_7304_19930325/TCGA_DU_7304_19930325_14.tif

applying PILBase.create gives

PILImage mode=RGB size=256x256

Pipeline: get_label -> PILBase.create

starting from

/home/julius/data/kaggle/lgg-mri-segmentation/kaggle_3m/TCGA_DU_7304_19930325/TCGA_DU_7304_19930325_14.tif

applying get_label gives

/home/julius/data/kaggle/lgg-mri-segmentation/kaggle_3m/TCGA_DU_7304_19930325/TCGA_DU_7304_19930325_14_mask.tif

applying PILBase.create gives

PILMask mode=L size=256x256

Final sample: (PILImage mode=RGB size=256x256, PILMask mode=L size=256x256)

Collecting items from /home/julius/data/kaggle/lgg-mri-segmentation/kaggle_3m

Found 3929 items

2 datasets of sizes 3144,785

Setting up Pipeline: PILBase.create

Setting up Pipeline: get_label -> PILBase.create

Setting up Pipeline: PILBase.create

Setting up Pipeline: get_label -> PILBase.create

Setting up after_item: Pipeline: AddMaskCodes -> Resize -- {'size': (224, 224), 'method': 'crop', 'pad_mode': 'reflection', 'resamples': (2, 0), 'p': 1.0} -> ToTensor

Setting up before_batch: Pipeline:

Setting up after_batch: Pipeline: IntToFloatTensor -- {'div': 255.0, 'div_mask': 1}

Building one batch

Applying item_tfms to the first sample:

Pipeline: AddMaskCodes -> Resize -- {'size': (224, 224), 'method': 'crop', 'pad_mode': 'reflection', 'resamples': (2, 0), 'p': 1.0} -> ToTensor

starting from

(PILImage mode=RGB size=256x256, PILMask mode=L size=256x256)

applying AddMaskCodes gives

(PILImage mode=RGB size=256x256, PILMask mode=L size=256x256)

applying Resize -- {'size': (224, 224), 'method': 'crop', 'pad_mode': 'reflection', 'resamples': (2, 0), 'p': 1.0} gives

(PILImage mode=RGB size=224x224, PILMask mode=L size=224x224)

applying ToTensor gives

(TensorImage of size 3x224x224, TensorMask of size 224x224)

Adding the next 3 samples

No before_batch transform to apply

Collating items in a batch

Applying batch_tfms to the batch built

Pipeline: IntToFloatTensor -- {'div': 255.0, 'div_mask': 1}

starting from

(TensorImage of size 4x3x224x224, TensorMask of size 4x224x224)

applying IntToFloatTensor -- {'div': 255.0, 'div_mask': 1} gives

(TensorImage of size 4x3x224x224, TensorMask of size 4x224x224)

dls = dblock.dataloaders(path, bs=bs)

dls.train.show_batch(max_n=4, nrows=1)
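One detail from the summary output is worth a note: `'resamples': (2, 0)` lists PIL resampling codes, with 2 (bilinear) used for the image and 0 (nearest neighbour) for the mask. The sketch below illustrates why masks need nearest-neighbour resampling. It is a simplified one-dimensional illustration in NumPy, not PIL's actual resizing algorithm, and the sample row and positions are made up for the example.

```python
import numpy as np

# One row of a mask with class indices 0 (background) and 1 (foreground)
row = np.array([0, 0, 1, 1, 1, 0], dtype=np.float64)

# Sample positions for shrinking 6 pixels to 4 (pixel-centre convention)
new_x = (np.arange(4) + 0.5) * len(row) / 4 - 0.5

# Linear interpolation blends neighbouring labels into fractional values
linear = np.interp(new_x, np.arange(len(row)), row)

# Nearest neighbour always picks an existing label for each output pixel
nearest = row[np.round(new_x).astype(int)]

print(linear.tolist())   # [0.0, 0.75, 1.0, 0.25]
print(nearest.tolist())  # [0.0, 1.0, 1.0, 0.0]
```

Values such as 0.75 do not correspond to any class, which is why the mask is resized with nearest-neighbour resampling while the image can be interpolated smoothly.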