In this tutorial, I will be looking at how to prepare an object detection dataset for use with PyTorch and FastAI. I will be using the DOTA dataset as an example. I will prepare the same data for both PyTorch and FastAI to illustrate the differences. This post focuses on the components that are specific to object detection. To see tricks and tips for using FastAI with data in general, see my FastAI Data Tutorial - Image Classification.

from fastai.data.all import *

from fastai.vision.all import *

from torchvision.datasets.vision import VisionDataset

from pycocotools.coco import COCO

from pyxtend import struct # pyxtend is available on pypi

DOTA - PyTorch

PyTorch Datasets are SUPER simple. So simple that they don’t actually do anything. It’s just a format. You can see the code here, but basically the only thing that makes something a PyTorch Dataset is that it has a __getitem__ method. This gives us incredible flexibility, but the lack of structure can also be difficult at first. For example, it’s not even clear what data type __getitem__ should return. Although it’s commonly a tuple, sometimes returning a dictionary can be useful too.

class DOTADataset(VisionDataset):

"""

Is there a separate dataset for train, test, and val?

"""

def __init__(self, image_root, annotations, transforms=None):

super().__init__(image_root, annotations, transforms)

#self.root = image_root don't need this cause super?

self.coco = COCO(annotations)

self.transforms = transforms

self.ids = list(sorted(self.coco.imgs.keys()))

def __getitem__(self, index):

coco = self.coco

img_id = self.ids[index]

ann_ids = coco.getAnnIds(imgIds=img_id)

target = coco.loadAnns(ann_ids)

path = coco.loadImgs(img_id)[0]['file_name']

img = Image.open(os.path.join(self.root, path)).convert('RGB')

# don't want to return a pil image

img = np.array(img)

if self.transforms is not None:

img, target = self.transforms(img, target)

return img, target

def __len__(self):

return len(self.ids)

dota_path = Path(r'E:\Data\Processed\DOTACOCO')

dota_train_annotations = dota_path / 'dota2coco_train.json'

dota_train_images = Path(r'E:\Data\Raw\DOTA\train\images\all\images')

dota_dataset = DOTADataset(dota_train_images, dota_train_annotations)

loading annotations into memory...

Done (t=1.47s)

creating index...

index created!

Because it’s a VisionDataset, we have a nice repr response.

dota_dataset

Dataset DOTADataset

Number of datapoints: 1411

Root location: E:\Data\Raw\DOTA\train\images\all\images

It’s easy to plot the images.

plt.imshow(dota_dataset[0][0])

<matplotlib.image.AxesImage at 0x2388054dac0>

Let’s build a simple way to look at images with labels.

def show_annotations(image, annotations, figsize=(20,20), axis_off=True):

plt.figure(figsize=figsize)

plt.imshow(image)

if axis_off:

plt.axis('off')

coco.showAnns(annotations)

coco = dota_dataset.coco

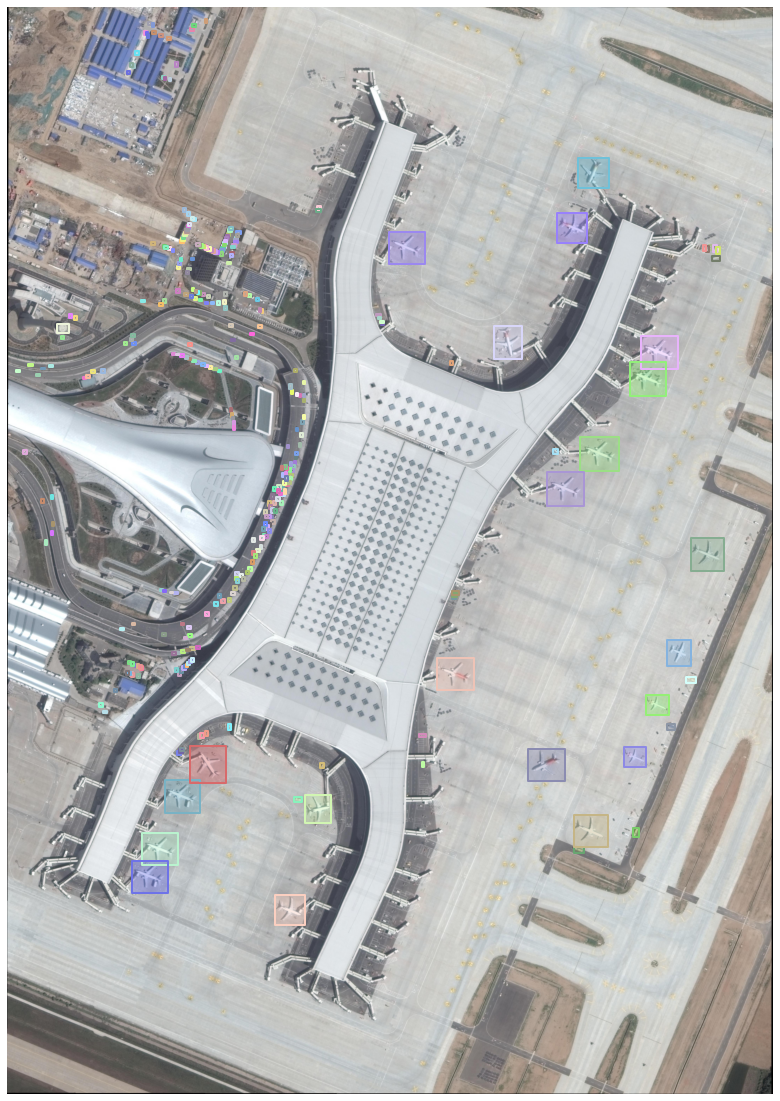

show_annotations(*dota_dataset[0])

DOTA - FastAI

OK, we’ve got the PyTorch part working. Now let’s plug it into FastAI

We need a way to get the images for the respective blocks. This will be a list of three functions, like so:

imgs, lbl_bbox = get_annotations(dota_train_annotations)

imgs[:5]

['P0000.png', 'P0001.png', 'P0002.png', 'P0005.png', 'P0008.png']

lbl_bbox contains lots of elements, so let’s take a look at the structure of it.

struct(lbl_bbox)

{list: [{tuple: [{list: [{list: [float, float, float, '...4 total']},

{list: [float, float, float, '...4 total']},

{list: [float, float, float, '...4 total']},

'...323 total']},

{list: [str, str, str, '...323 total']}]},

{tuple: [{list: [{list: [float, float, float, '...4 total']},

{list: [float, float, float, '...4 total']},

{list: [float, float, float, '...4 total']},

'...40 total']},

{list: [str, str, str, '...40 total']}]},

{tuple: [{list: [{list: [float, float, float, '...4 total']},

{list: [float, float, float, '...4 total']},

{list: [float, float, float, '...4 total']},

'...288 total']},

{list: [str, str, str, '...288 total']}]},

'...1410 total']}

Now we need a function to pass to get_items inside the datablock. Because we already have a list of all the items, all we need to do is write a function that returns that list.

def get_train_imgs(noop):

return imgs

Given an we need to get the correct annotation. Fortunately, we can look it up in a dictionary.

img2bbox = dict(zip(imgs, lbl_bbox))

Now, we put all that together in our getters.

getters = [lambda o: dota_train_images/o,

lambda o: img2bbox[o][0],

lambda o: img2bbox[o][1]]

We can add any transforms we want.

item_tfms = [Resize(128, method='pad'),]

batch_tfms = [Rotate(), Flip(), Normalize.from_stats(*imagenet_stats)]

Now, we turn it into a DataBlock.

dota_dblock = DataBlock(blocks=(ImageBlock, BBoxBlock, BBoxLblBlock),

splitter=RandomSplitter(),

get_items=get_train_imgs,

getters=getters,

item_tfms=item_tfms,

batch_tfms=batch_tfms,

n_inp=1)

From from there we create our DataLoaders.

dls = dota_dblock.dataloaders(dota_train_images)

Due to IPython and Windows limitation, python multiprocessing isn't available now.

So `number_workers` is changed to 0 to avoid getting stuck

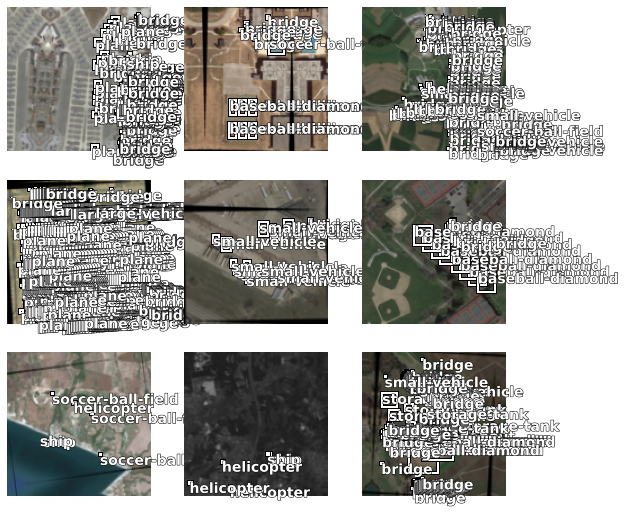

As you can see, the show_batch method doesn’t work as well with many labels, as is often the case with aerial imagery. However, you can see use it to get a general sense.

dls.show_batch()

That’s all there is to it!