This post shows how to load and evaluate the model we built in the previous post.

import dill

from siam_utils import SiameseImage

from sklearn.metrics import accuracy_score

from fastai.vision.all import *

learner = load_learner(Path(os.getenv("MODELS")) / "siam_catsvdogs_tutorial.pkl", cpu=False, pickle_module=dill)
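The `load_learner` call above builds its path from the `MODELS` environment variable. If that variable is unset, `os.getenv` returns `None` and `Path(None)` raises a `TypeError` that says nothing about the real cause. A minimal sketch of a guard follows; the helper name `model_path` is hypothetical and not part of fastai.

```python
import os
from pathlib import Path


def model_path(filename, env_var="MODELS"):
    """Return the path to `filename` inside the directory named by `env_var`.

    `model_path` is an illustrative helper, not a fastai function.
    """
    root = os.getenv(env_var)
    if root is None:
        # Fail early with a message that names the missing variable.
        raise RuntimeError(f"Environment variable {env_var} is not set")
    return Path(root) / filename
```

With this in place, the first argument to `load_learner` could be `model_path("siam_catsvdogs_tutorial.pkl")`.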

path = untar_data(URLs.PETS)

files = get_image_files(path / "images")

imgval = PILImage.create(files[0])

imgtest = PILImage.create(files[1])

siamtest = SiameseImage(imgval, imgtest)

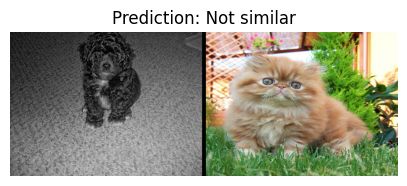

siamtest.show();

@patch

def siampredict(self: Learner, item, rm_type_tfms=None, with_input=False):

res = self.predict(item, rm_type_tfms=None, with_input=False)

if res[0] == tensor(0):

SiameseImage(item[0], item[1], "Prediction: Not similar").show()

else:

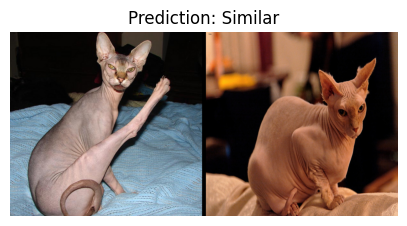

SiameseImage(item[0], item[1], "Prediction: Similar").show()

return res

res = learner.siampredict(siamtest)
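`siampredict` is callable as a method on `learner` because `@patch` attaches the function to the class named in the type annotation of its first parameter (`Learner` here). The sketch below illustrates that idea in plain Python. `simple_patch` and `Greeter` are made-up names, and this is a simplified stand-in, not fastcore's actual implementation.

```python
import inspect


def simple_patch(func):
    """Attach `func` as a method on the class annotating its first parameter."""
    first_param = next(iter(inspect.signature(func).parameters.values()))
    cls = first_param.annotation  # e.g. Greeter in `self: Greeter`
    setattr(cls, func.__name__, func)
    return func


class Greeter:
    def __init__(self, name):
        self.name = name


@simple_patch
def greet(self: Greeter):
    return f"Hello, {self.name}"


print(Greeter("Ada").greet())  # Hello, Ada
```

This makes it possible to extend a library class such as `Learner` without subclassing it, at the cost of modifying the class for everything else in the session.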

imgval = PILImage.create(files[9])

imgtest = PILImage.create(files[35])

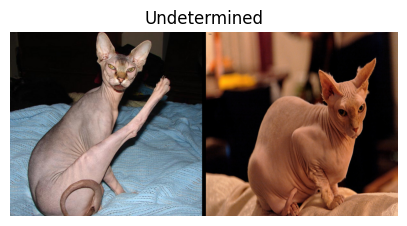

siamtest = SiameseImage(imgval, imgtest)

siamtest.show();

res = learner.siampredict(siamtest)

Let’s see how well the model does overall by computing its accuracy across all the image files.

test_dl = learner.dls.test_dl(files)

preds, ground_truth = learner.get_preds(dl=test_dl)

round(accuracy_score(ground_truth, torch.argmax(preds, dim=1)), 4)

0.9306
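To make the last step concrete: `torch.argmax(preds, dim=1)` picks the highest-scoring class for each row, and `accuracy_score` returns the fraction of rows where that class matches the label. Here is a pure-Python sketch of the same computation. The probabilities and labels are made up for illustration and are not output from the model.

```python
def argmax_rows(probs):
    """Index of the largest value in each row (like argmax over dim=1)."""
    return [max(range(len(row)), key=row.__getitem__) for row in probs]


def accuracy(y_true, y_pred):
    """Fraction of predictions that equal the true label."""
    matches = sum(t == p for t, p in zip(y_true, y_pred))
    return matches / len(y_true)


# Made-up class probabilities for four image pairs: [not similar, similar]
probs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.3, 0.7]]
labels = [0, 1, 1, 1]

predicted = argmax_rows(probs)      # [0, 1, 0, 1]
print(accuracy(labels, predicted))  # 0.75
```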